The acceleration of AI integration into core business operations has transformed the foundational large language model (LLM) debate from a technical curiosity into a critical strategic decision. For businesses in Charlotte, NC, and beyond, choosing the right underlying AI engine dictates the potential speed, scale, and security of their automation initiatives. The head-to-head competition between Anthropic’s Claude and OpenAI’s ChatGPT is no longer about who can write the best email; it’s about which platform delivers the highest business value for complex tasks like enterprise automation and agentic coding.

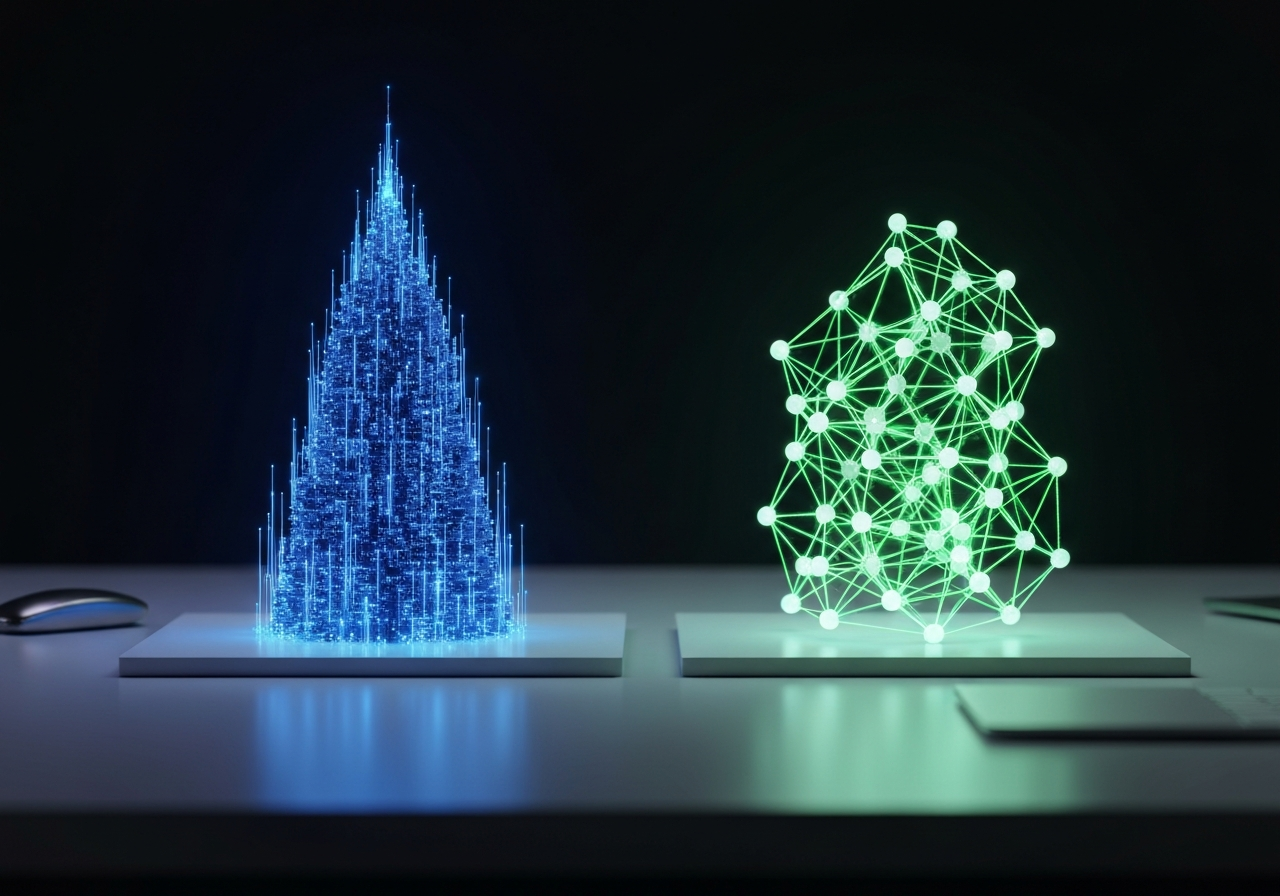

Understanding this strategic choice between Claude and ChatGPT is essential for any organization seeking to deploy reliable, context-aware AI agents in its infrastructure. Although the two models offer roughly comparable general capability, their differences in context window size, data privacy, and integration ecosystem make each one better suited to particular enterprise use cases.

Setting the Stage: Why the Claude vs. ChatGPT Debate Matters for AI Automation

The primary concern for modern businesses isn’t the chatbot interface; it’s the AI model’s capability as the engine behind automation platforms such as n8n or custom Python/FastAPI backends. An AI agent is an autonomous piece of software that can observe its environment, decide on a course of action, and execute that action. When an agent is empowered to use tools or write code, its reliability becomes paramount. This is where the core philosophical differences between Anthropic’s and OpenAI’s offerings reveal themselves.
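
To make the observe, decide, act cycle concrete, here is a minimal Python sketch of a generic agent loop. It is not Anthropic’s or OpenAI’s agent framework; `run_agent`, `Action`, `AgentState`, and the scripted `decide` function are hypothetical, and in a real system `decide` would be a call to Claude or GPT-4o. The `max_steps` cap and the refusal of unknown tools are the simplest forms of reliability guardrail.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    tool: str           # name of the tool to run, or "finish" to stop
    argument: str = ""  # input passed to the tool


@dataclass
class AgentState:
    goal: str
    history: list[str] = field(default_factory=list)  # observations so far


def run_agent(
    state: AgentState,
    decide: Callable[[AgentState], Action],  # the LLM call in a real system
    tools: dict[str, Callable[[str], str]],  # tools the agent is allowed to use
    max_steps: int = 5,                      # hard cap so a confused agent cannot loop forever
) -> list[str]:
    for _ in range(max_steps):
        action = decide(state)               # decide: pick the next action from goal + history
        if action.tool == "finish":
            break
        if action.tool not in tools:         # refuse unknown tools instead of guessing
            state.history.append(f"error: unknown tool {action.tool}")
            continue
        result = tools[action.tool](action.argument)                          # act
        state.history.append(f"{action.tool}({action.argument}) -> {result}")  # observe
    return state.history


# Usage with a scripted stand-in for the model: look up one order, then stop.
def scripted_decide(state: AgentState) -> Action:
    return Action("lookup", "order-42") if not state.history else Action("finish")


history = run_agent(
    AgentState(goal="check order status"),
    scripted_decide,
    {"lookup": lambda key: f"{key} shipped"},
)
print(history)  # ['lookup(order-42) -> order-42 shipped']
```

Swapping in a different model only changes `decide`; the tool list and step limit stay the same, which is one reason an orchestration layer makes side-by-side model comparisons practical.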

Claude’s Value Proposition: Security and Context Depth

Anthropic, founded by former OpenAI executives, has built its models around "Constitutional AI," a training approach that prioritizes safety, consistency, and a lower risk of harmful outputs. This focus carries into enterprise features such as strong access governance and a default policy of not training models on data from its commercial products, which makes Claude a preferred choice for companies with strict security and audit requirements.

ChatGPT’s Value Proposition: Versatility and Ecosystem Breadth

OpenAI’s ChatGPT, powered by models like GPT-4o, offers a broader, more versatile toolset, including multimodal capabilities (image and audio processing) and an extensive ecosystem of custom GPTs and GPT Actions. This versatility often makes ChatGPT the better option as a general "automation hub" across a wide array of business systems.

The Context Window Advantage: Handling Enterprise Data for Strategic AI Workflows

In professional AI work, the size of the model’s "working memory," or context window, is often a deciding factor for enterprise-scale tasks. A larger context window lets the model process massive documents, stay coherent over long, multi-step conversations, and analyze entire codebases in a single prompt.

Claude currently holds a significant advantage in context handling for high-tier enterprise users:

- Claude’s Massive Context: While standard Claude 4 models support a 200,000-token context window (roughly 150,000 words), Anthropic has rolled out beta access to a 1-million-token context window through its API for eligible organizations. The Claude Enterprise plan also offers an expanded 500,000-token window, which equates to processing "hundreds of sales transcripts, dozens of 100-page documents, and 200,000 lines of code" in a single, sustained analytical task.

- Strategic Benefit: For businesses focused on complex tasks like summarizing quarterly reports, performing deep regulatory analysis on large legal texts, or refactoring massive legacy codebases with deep context awareness, Claude’s exceptional context capacity is often the decisive factor.

While ChatGPT models are highly capable, Claude’s leading context size, combined with the "Context Awareness" feature in its newer models (which tells the model how much of its token budget remains so it can pace long tasks), gives it a distinct edge in data-heavy, long-running analytical workflows.
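
As a rough planning aid, the ratio quoted above (200,000 tokens for roughly 150,000 words, or about 0.75 words per token) can be turned into a quick fit check. This is a sketch under stated assumptions: real tokenizers vary with language and formatting, the window labels in `CONTEXT_WINDOWS` are invented for this example, and the 8,000-token output reserve is arbitrary. For exact counts, use the provider’s own token-counting tools.

```python
# Ratio implied above: 200,000 tokens ~ 150,000 words, i.e. about 0.75 words per token.
# Real tokenizers vary by language and content, so treat this as a rough estimate.
CONTEXT_WINDOWS = {
    "standard": 200_000,    # standard Claude 4 models
    "enterprise": 500_000,  # Claude Enterprise plan
    "beta_1m": 1_000_000,   # 1M-token beta for eligible organizations
}


def estimate_tokens(text: str) -> int:
    words = len(text.split())
    return (words * 4 + 2) // 3  # ceiling of words / 0.75, in integer arithmetic


def fits(text: str, window: str, reserve_for_output: int = 8_000) -> bool:
    # Leave headroom for the model's answer; the 8,000-token reserve is an assumption.
    return estimate_tokens(text) + reserve_for_output <= CONTEXT_WINDOWS[window]


report = "word " * 120_000                  # stand-in for a 120,000-word document
print(estimate_tokens(report))              # 160000
print(fits(report, "standard"))             # True
print(fits("word " * 300_000, "standard"))  # False: ~400,000 tokens needs a larger window
```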

The Agentic Leap: Comparing Model Strengths for Agentic Coding and Autonomous Tasks

The next frontier in AI automation is the development of autonomous AI agents that can perform multi-step tasks—including writing, running, and testing code—without constant human intervention. Both companies have dedicated AI coding tools, but their philosophies differ significantly:

Claude Code: Developer-Guided, Local Control

Claude Code is Anthropic’s command-line tool for agentic coding. It emphasizes a "developer-in-the-loop" philosophy:

- Deep Local Integration: Claude Code runs directly in the developer’s terminal, offering deep, contextual awareness of the entire local codebase.

- Interactive Workflow: The model makes coordinated changes across multiple files based on user prompts, but critical changes often require explicit developer approval, keeping the human in control.

- Best Use Case: Ideal for developers who need an expert collaborator for deep code analysis, debugging large repositories, and guided refactoring tasks where local context and control are essential.
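
The developer-in-the-loop pattern can be illustrated with a small approval gate: routine edits apply directly, while edits flagged as critical wait for a yes or no from the developer. This is a generic sketch, not Claude Code’s internal implementation; `ProposedEdit`, the `critical` flag, and the in-memory `files` dictionary are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedEdit:
    path: str
    new_text: str
    critical: bool  # hypothetical flag, e.g. the edit touches config or deletes code


def apply_with_approval(
    edits: list[ProposedEdit],
    files: dict[str, str],                    # in-memory stand-in for the working tree
    approve: Callable[[ProposedEdit], bool],  # asks the developer; True means proceed
) -> list[str]:
    applied = []
    for edit in edits:
        if edit.critical and not approve(edit):
            continue  # developer declined, so the file is left untouched
        files[edit.path] = edit.new_text
        applied.append(edit.path)
    return applied


files = {"app.py": "print('old')", "settings.py": "DEBUG = True"}
edits = [
    ProposedEdit("app.py", "print('new')", critical=False),
    ProposedEdit("settings.py", "DEBUG = False", critical=True),
]
# Simulate a developer who rejects the critical change.
applied = apply_with_approval(edits, files, approve=lambda edit: False)
print(applied)  # ['app.py']
```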

OpenAI Codex: Autonomous Cloud Delegation

OpenAI’s approach with its AI coding agent, branded as Codex, leans toward delegation and asynchronous work:

- Cloud Sandbox Environment: The cloud agent runs tasks autonomously within isolated sandboxes, pre-loaded with the repository.

- Delegation Workflow: It is designed for delegating end-to-end coding tasks, making coordinated changes, generating logs for evidence of actions, and even submitting pull requests automatically for human review.

- Best Use Case: Superior for teams looking to automate entire development workflows, run independent tasks asynchronously, and integrate AI into cloud-based CI/CD pipelines.
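
The delegation model can be sketched as independent tasks running concurrently in isolated workers, each returning a log as evidence, with human review at the end. This sketch uses Python’s `asyncio`; `run_in_sandbox` is a hypothetical placeholder and does not reflect how Codex actually provisions or runs its sandboxes.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class TaskResult:
    task: str
    ok: bool
    log: list[str] = field(default_factory=list)  # evidence of each action taken


async def run_in_sandbox(task: str) -> TaskResult:
    # Hypothetical stand-in for an isolated sandbox run. A real agent would clone
    # the repository, edit files, and run the test suite at these points.
    log = [f"cloned repository for: {task}"]
    await asyncio.sleep(0)  # yield to the event loop, as real I/O would
    log.append(f"ran tests for: {task}")
    return TaskResult(task=task, ok=True, log=log)


async def delegate(tasks: list[str]) -> list[TaskResult]:
    # Independent tasks run concurrently; gather returns results in submission order.
    return list(await asyncio.gather(*(run_in_sandbox(t) for t in tasks)))


results = asyncio.run(delegate(["fix flaky test", "update dependency"]))
review_queue = [r.task for r in results if r.ok]  # the human review step comes last
print(review_queue)  # ['fix flaky test', 'update dependency']
```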

For organizations, the choice between these two powerful tools hinges on their development methodology: choose Claude Code for interactive, expert collaboration, or choose Codex for autonomous task delegation and scalability.

Integration Power: Which Model Excels in n8n Workflows and API-First Systems?

A great LLM is useless to a business if it cannot be integrated into existing workflow automation platforms. Both Claude and ChatGPT integrate with n8n, a popular workflow automation platform for technical teams, but with subtle differences that affect implementation and use.

Claude and n8n: The Power of the Model Context Protocol

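Claude’s API is straightforward, built for developers to construct adaptable and scalable workflows. In n8n, Claude can be called through the built-in Anthropic nodes or, for full control over custom API calls, through the HTTP Request node. Anthropic’s Model Context Protocol (MCP), an open standard for connecting models to external tools and data sources, offers a further path for exposing business systems to Claude. This API-first approach means developers can use Claude’s superior long-context reasoning within their n8n workflows for tasks such as: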

- Processing a massive CSV file within an n8n workflow using Claude’s long context window (200K tokens as standard, with a 1M-token beta for eligible API users) to find anomalies.

- Having a Claude AI agent trigger actions (like updating a record or sending an email) after analyzing a document pulled by another n8n node.
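
For readers wiring this up by hand, here is a sketch of the request an n8n HTTP Request node would send to Anthropic’s Messages API, built with Python’s standard library. The endpoint, the `x-api-key` and `anthropic-version` headers, and the `model`/`max_tokens`/`messages` body fields follow Anthropic’s documented API; the model ID is an example to replace with one your account can use, and wrapping the document in tags is a common prompt-formatting convention rather than a requirement.

```python
import json
import urllib.request

ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"


def build_claude_request(api_key: str, document: str, question: str,
                         model: str = "claude-sonnet-4-20250514") -> urllib.request.Request:
    # Example model ID; substitute one your account has access to.
    body = {
        "model": model,
        "max_tokens": 1024,  # upper bound on the length of Claude's reply
        "messages": [
            {
                "role": "user",
                # Wrapping the data in tags keeps it clearly separate from the instruction.
                "content": f"<document>\n{document}\n</document>\n\n{question}",
            }
        ],
    }
    return urllib.request.Request(
        ANTHROPIC_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


csv_text = "id,amount\n1,10\n2,12\n3,98000"  # toy data with one obvious outlier
req = build_claude_request("YOUR_API_KEY", csv_text, "List any rows with anomalous amounts.")
# Sending is left out so the sketch runs offline. With a real key:
#   with urllib.request.urlopen(req) as resp:
#       reply = json.load(resp)
#   print(reply["content"][0]["text"])
```

In n8n, the same values map onto the HTTP Request node’s method, URL, header, and JSON body fields.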

ChatGPT and n8n: Multimodality and Versatility

ChatGPT’s integration strength lies in its expansive existing ecosystem. While it also uses API calls for n8n workflows, its core models (like GPT-4o) and capabilities offer more versatility:

- Multimodality: GPT-4o’s native support for image and audio processing means an n8n workflow could, for instance, analyze an image uploaded by a user before routing the data to a business system.

- GPTs and Actions: Custom-built GPTs (custom assistants) can be incorporated into automation strategies. Through Actions, which let a GPT call external APIs described by an OpenAPI schema, a GPT can trigger an n8n Webhook node and hand work off to a full workflow, effectively giving it "arms and legs" to interact with other apps.
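
Here is a sketch of the image-analysis step, assuming OpenAI’s Chat Completions endpoint: the image is sent alongside the text prompt as an `image_url` content part, here encoded as a base64 data URL. The placeholder bytes, the default `gpt-4o` model, and the `build_image_analysis_body` helper are assumptions for illustration; a real workflow would pass the uploaded file from an earlier n8n node.

```python
import base64
import json

OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_image_analysis_body(image_bytes: bytes, instruction: str,
                              model: str = "gpt-4o",
                              mime_type: str = "image/png") -> dict:
    # The image travels inline as a base64 data URL next to the text instruction.
    data_url = f"data:{mime_type};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": instruction},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }],
    }


# Placeholder bytes (just the start of a PNG signature); a real workflow passes the upload.
body = build_image_analysis_body(b"\x89PNG", "Describe this upload and name the product category.")
payload = json.dumps(body)  # POST this to OPENAI_URL with an "Authorization: Bearer <key>" header
```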

Ultimately, a business might leverage both. Many organizations find themselves building workflows in n8n where the initial analysis is handled by Claude for its superior reasoning on long documents, and the resulting structured output is then processed by a different node that uses the OpenAI API for final-mile tasks, such as generating an image or a specific code snippet.
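
That hand-off can be sketched as two injected functions with a JSON contract between them. Both model calls are stubbed so the flow runs offline; `two_stage_pipeline`, `fake_analyze`, `fake_finish`, and the `summary`/`risks` fields are hypothetical. Validating the structured output before the second stage is the design choice worth copying: a malformed analysis fails loudly instead of flowing into the final-mile step.

```python
import json
from typing import Callable


def two_stage_pipeline(
    document: str,
    analyze: Callable[[str], str],  # stage 1: long-document analysis (Claude in this scenario)
    finish: Callable[[dict], str],  # stage 2: final-mile task (OpenAI in this scenario)
) -> str:
    findings = json.loads(analyze(document))  # require structured JSON at the hand-off
    if "summary" not in findings:
        raise ValueError("analysis step did not return a 'summary' field")
    return finish(findings)


# Stubs standing in for the two model calls.
def fake_analyze(doc: str) -> str:
    return json.dumps({"summary": f"{len(doc.split())} words reviewed", "risks": ["late fees"]})


def fake_finish(findings: dict) -> str:
    return f"Image prompt: infographic of {findings['summary']}, highlighting {findings['risks'][0]}"


print(two_stage_pipeline("quarterly report text here", fake_analyze, fake_finish))
# Image prompt: infographic of 4 words reviewed, highlighting late fees
```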

The Specialization Factor: Tailoring Claude vs. ChatGPT for Custom CRM Development

For small to medium-sized businesses and enterprises in areas like Charlotte, NC, that require custom business platforms—such as a proprietary CRM, ERP, or advanced e-commerce solution—the choice between the two LLMs becomes highly specialized. This is where Idea Forge Studios often sees the most nuanced debates about strategic AI implementation.

Use Case 1: Complex Customer Service (Claude’s Edge)

When building an AI agent to handle customer service inquiries by referencing thousands of pages of internal documentation (knowledge base articles, past support tickets, product manuals), Claude’s exceptional context window is invaluable. Loading a large volume of company-specific knowledge into the model’s working memory helps the AI provide accurate, consistent, and policy-compliant answers, which is crucial for building trust and maintaining brand integrity. This is the bedrock of authoritative customer engagement.
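
One way to load a knowledge base into a single prompt is to pack articles in priority order until a token budget is reached. This is a minimal sketch: `build_support_prompt` is a hypothetical helper, the token estimate is a rough words-per-token heuristic rather than a real tokenizer, and the grounding instruction is an example of a policy-compliance prompt, not a guarantee of compliant answers.

```python
INSTRUCTION = ("Answer only from the documents below. "
               "If they do not cover the question, say so.")


def estimate_tokens(text: str) -> int:
    # Rough heuristic: about 0.75 words per token (200,000 tokens ~ 150,000 words).
    return (len(text.split()) * 4 + 2) // 3


def build_support_prompt(articles: list[tuple[str, str]],
                         token_budget: int) -> tuple[str, list[str]]:
    """Pack (title, body) articles, highest priority first, until the budget is used up."""
    parts = [INSTRUCTION]
    used = estimate_tokens(INSTRUCTION)
    included = []
    for title, body in articles:
        block = f'<doc title="{title}">\n{body.strip()}\n</doc>'
        cost = estimate_tokens(block)
        if used + cost > token_budget:
            break  # stop at the first article that does not fit, preserving priority order
        parts.append(block)
        used += cost
        included.append(title)
    return "\n\n".join(parts), included


articles = [("Refunds", "word " * 300), ("Shipping", "word " * 300), ("Warranty", "word " * 300)]
prompt, included = build_support_prompt(articles, token_budget=900)
print(included)  # ['Refunds', 'Shipping']
```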

Use Case 2: Multi-Channel Marketing & Sales Automation (ChatGPT’s Edge)

For systems that require constant, real-time interaction across various external tools—like synchronizing customer conversations from Slack, interpreting visual data from marketing reports, or generating dynamic content based on live web searches—ChatGPT’s API versatility and multimodal capabilities often make it a more seamless choice. Its existing deep developer ecosystem and custom GPT tools simplify the process of extending the AI’s functionality to interact with external WordPress or custom PHP backends.

Strategic Development Considerations

When developing custom solutions, the right model selection directly influences system architecture:

| Feature | Claude Model (Enterprise Focus) | ChatGPT Model (Versatility Focus) |
|---|---|---|
| Data Privacy & Security | A higher level of enterprise-grade security, with optional deployment through managed cloud services (Amazon Bedrock, Google Cloud Vertex AI). | Relies on default API security protocols; custom GPTs and enterprise tiers offer security options. |
| Reasoning on Long Texts | Superior. Designed for lengthy, complex analytical tasks (500K to 1M token context). | Excellent, but limited by smaller context windows than Claude’s enterprise tiers. |
| Agentic Coding Workflow | Developer-in-the-loop (Claude Code CLI), focused on deep codebase analysis and local integration. | Autonomous delegation (Codex Agent), focused on cloud-based, asynchronous task execution. |

Businesses must evaluate whether their priority is deep contextual analysis of massive proprietary datasets (favoring Claude) or broad, flexible integration and real-time multimodal interaction across dozens of apps (favoring ChatGPT). For advanced automation, the best solution often integrates both, with n8n or a similar platform acting as the intelligent orchestration layer, because the core idea is always a connected ecosystem.
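
The routing decision can itself live in the orchestration layer. The sketch below encodes the heuristics in this comparison as simple rules; `choose_provider`, the `Task` fields, and the 100,000-token threshold are hypothetical and should be tuned against your own workloads rather than treated as benchmark-backed.

```python
from dataclasses import dataclass

LONG_CONTEXT_THRESHOLD = 100_000  # hypothetical cut-off in tokens; tune for real workloads


@dataclass
class Task:
    estimated_tokens: int
    has_image: bool = False
    has_audio: bool = False


def choose_provider(task: Task) -> str:
    # Rules mirror this comparison's heuristics, not measured benchmark results.
    if task.has_audio or task.has_image:
        return "openai"     # multimodal interaction
    if task.estimated_tokens > LONG_CONTEXT_THRESHOLD:
        return "anthropic"  # deep analysis of large proprietary text
    return "either"         # no strong signal; decide on cost, latency, or existing integrations


for t in [Task(300_000), Task(5_000, has_image=True), Task(5_000)]:
    print(choose_provider(t))
# anthropic
# openai
# either
```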

The Strategic Verdict: Choosing the Right AI Partner for Your Business Automation Needs

The strategic verdict in the Claude vs. ChatGPT debate is not a simple "winner takes all" conclusion; it is a choice based on alignment with specific business objectives and risk tolerance. As a solution-oriented organization, Idea Forge Studios guides clients toward the model that best fulfills their most critical needs.

Choose Claude if your business prioritizes:

- Deep Enterprise Data Analysis: Your primary AI use case involves processing, summarizing, or reasoning over massive volumes of proprietary text or documentation (e.g., legal, financial, or engineering reports).

- Security and Compliance: You have strict requirements for data privacy, want stronger assurances that your data will not be used to train models, or require deployment on managed cloud services like Amazon Bedrock or Google Cloud Vertex AI.

- Guided Coding and Refactoring: Your engineering team needs an AI partner for complex, multi-file code analysis and refactoring in an interactive, developer-guided environment.

Choose ChatGPT if your business prioritizes:

- Broad Versatility and Multimodality: You need an all-purpose AI that can handle text, images, and potentially audio, and you want access to the broadest third-party GPT ecosystem.

- Autonomous Workflow Delegation: Your goal is to offload entire coding or analytical tasks to an asynchronous cloud-based agent that automatically handles pull requests and isolated execution.

- Quick Integration and Extensive Tools: You need fast, wide-ranging integration with existing business applications and want to leverage the massive, mature developer ecosystem surrounding OpenAI.

For businesses seeking comprehensive digital solutions, the true power lies in the strategic deployment of these technologies, often through an orchestration layer, whether a custom Python backend or a platform like n8n, that leverages the best features of both LLMs. The goal is always a reliable, efficient, and secure automation ecosystem that drives growth, and a clear understanding of each model’s strengths and weaknesses is the first step toward that objective.

Translate Strategy into Action: Build Your AI Automation Ecosystem

The choice between Claude and ChatGPT is a strategic architectural decision. Idea Forge Studios specializes in orchestrating complex, secure, and high-value AI solutions—from agentic coding to custom CRM and n8n workflows. We guide you past the debate to reliable implementation.

Schedule Your Strategic AI Consultation Now

Ready to talk details? Contact our team directly:

Call us at (980) 322-4500

Email us at info@ideaforgestudios.com
